For decades, robots have lived almost exclusively in research labs, factories, and sci-fi movies. In 2026, that boundary is finally breaking down. Rapid advances in artificial intelligence, miniaturized hardware, and falling production costs are ushering in a new era of Physical AI, one in which robots are designed to operate in everyday human environments.

The shift marks a turning point not just for technology, but for how people live, work, and interact with machines.

What Is Physical AI?

Physical AI refers to artificial intelligence systems embedded in machines that can perceive, reason, and act in the real world. Unlike software-only AI models, Physical AI combines:

- Advanced perception (vision, audio, touch)

- Real-time decision making

- Autonomous movement and manipulation

- Continuous learning from physical environments

According to research published by NVIDIA Robotics, recent breakthroughs in simulation training and sensor fusion have dramatically reduced the gap between lab performance and real-world reliability.
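
To make the perceive, decide, act cycle listed above concrete, here is a minimal sketch in Python. It is a toy simulation, not how any real robot stack works: the `Observation` type, the function names, the 0.5 m safety threshold, and the 0.25 m step size are all assumptions made for illustration, and a hand-written rule stands in for the learned model a real system would use. Continuous learning is left out entirely.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical fused sensor reading: distance to the nearest obstacle, in meters."""
    obstacle_distance_m: float


def perceive(raw_distances_m: list[float]) -> Observation:
    # Perception: reduce several (simulated) range readings to the closest one.
    return Observation(obstacle_distance_m=min(raw_distances_m))


def decide(obs: Observation, safe_distance_m: float = 0.5) -> str:
    # Decision making: a simple rule stands in for a learned policy (assumption).
    return "stop" if obs.obstacle_distance_m < safe_distance_m else "forward"


def act(command: str, position_m: float, step_m: float = 0.25) -> float:
    # Action: advance the simulated robot, or hold position on "stop".
    return position_m + step_m if command == "forward" else position_m


def run_loop(wall_at_m: float, steps: int) -> tuple[float, list[str]]:
    """Run the perceive -> decide -> act cycle against a wall at a fixed distance."""
    position_m = 0.0
    commands: list[str] = []
    for _ in range(steps):
        obs = perceive([wall_at_m - position_m])
        command = decide(obs)
        position_m = act(command, position_m)
        commands.append(command)
    return position_m, commands
```

Calling `run_loop(wall_at_m=1.0, steps=5)` moves the simulated robot forward until the wall is closer than the safety threshold, then holds it in place. The point is only the shape of the loop: each cycle turns fresh sensor input into a decision and then into movement.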

Why 2026 Is the Breakout Year

Several trends are converging to make 2026 a tipping point for consumer robotics:

- More capable multimodal AI models

- Cheaper sensors, batteries, and compute chips

- Massive investment from Big Tech and startups

- Growing demand for automation in daily life

Funding data tracked by CB Insights shows record venture investment flowing into humanoid robots, home assistants, and service robotics heading into 2026.

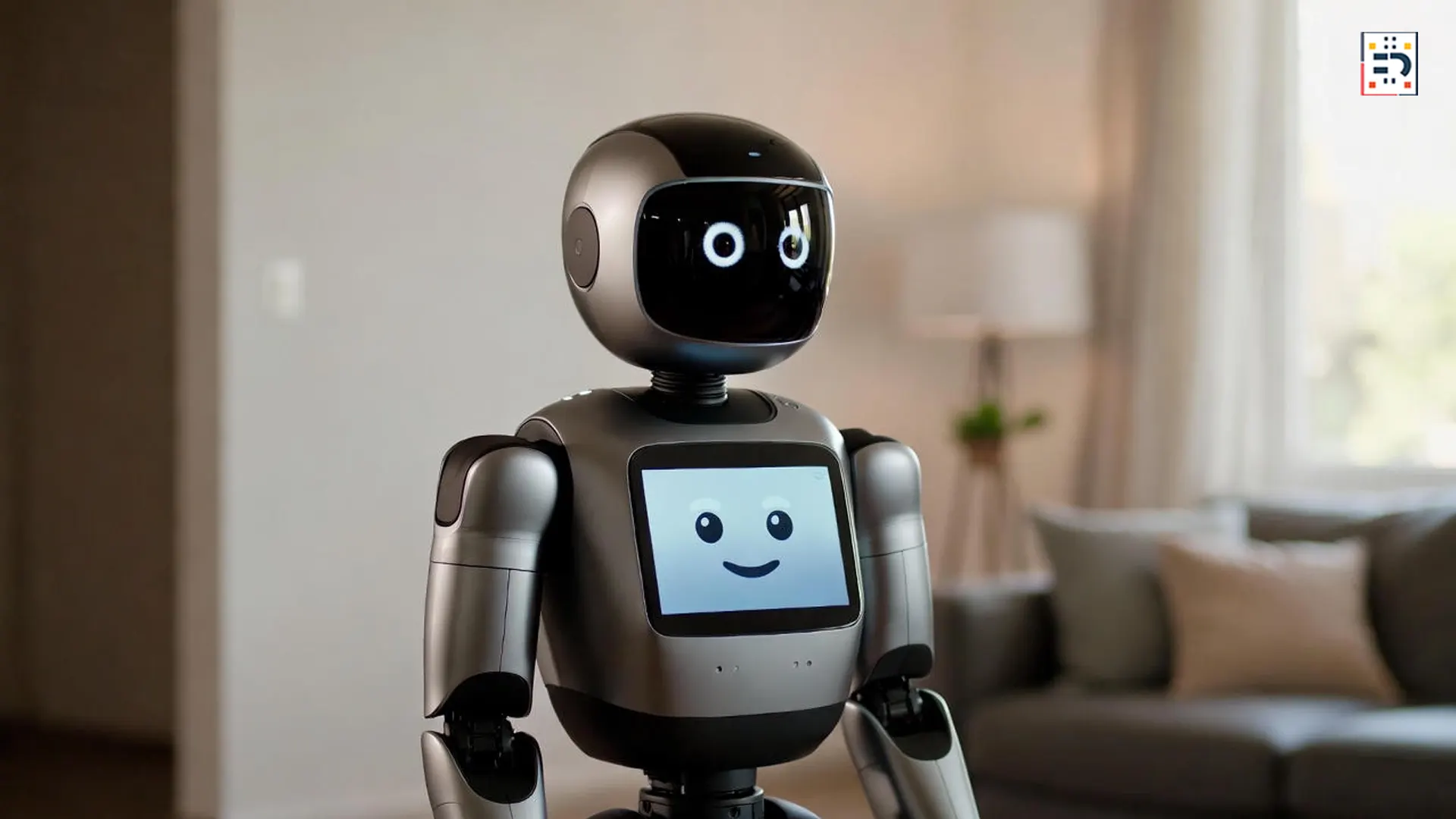

From Factory Floors to Family Rooms

Industrial robots have long dominated manufacturing, but the next wave is personal. Companies are now targeting:

- Household assistance (cleaning, organizing, carrying)

- Elder care and assisted living

- Home security and monitoring

- Companionship and education

Companies such as Boston Dynamics, along with emerging consumer robotics startups, are adapting industrial-grade mobility for safer, friendlier home designs.

The Role of Large AI Models

What truly unlocks Physical AI is the rise of large, general-purpose AI models capable of understanding language, vision, and context. Instead of relying on hard-coded instructions, robots can now:

- Follow natural language commands

- Adapt to unfamiliar environments

- Learn new tasks without reprogramming

Researchers at Google DeepMind Robotics have demonstrated robots that generalize skills across tasks — a key requirement for home use.

Why Consumers Are Finally Ready

Earlier generations of home robots struggled with limited functionality and high prices. In contrast, 2026-era Physical AI benefits from:

- Smart home integration

- Improved safety and reliability

- Subscription-based pricing models

- Clear use cases beyond novelty

Analysts at McKinsey predict consumer robotics adoption will mirror early smartphone adoption once value becomes obvious and prices normalize.

Risks, Ethics, and Trust

Despite the excitement, Physical AI introduces new concerns around privacy, safety, and accountability. Robots operating in private spaces raise questions about:

- Data collection inside homes

- Security vulnerabilities

- Over-reliance on automation

Regulators and standards bodies are beginning to address these risks, but public trust will be a decisive factor in adoption.

What the Living Room of 2026 Might Look Like

By 2026, early adopters may see robots performing routine tasks alongside them at home, much as smart speakers became household fixtures a decade earlier. While mass adoption will take time, the transition from lab prototypes to consumer products is already underway.

Physical AI is no longer a distant future concept — it’s becoming a household technology category.

The year 2026 will likely be remembered as the moment robots stopped being experimental tools and started becoming everyday companions.

As Physical AI moves from controlled labs into living rooms, the real challenge won’t be whether robots can work — but whether society is ready to live with them.

#PhysicalAI #Robotics #AIInnovation #FutureTech #HomeRobots #ArtificialIntelligence #ConsumerTech