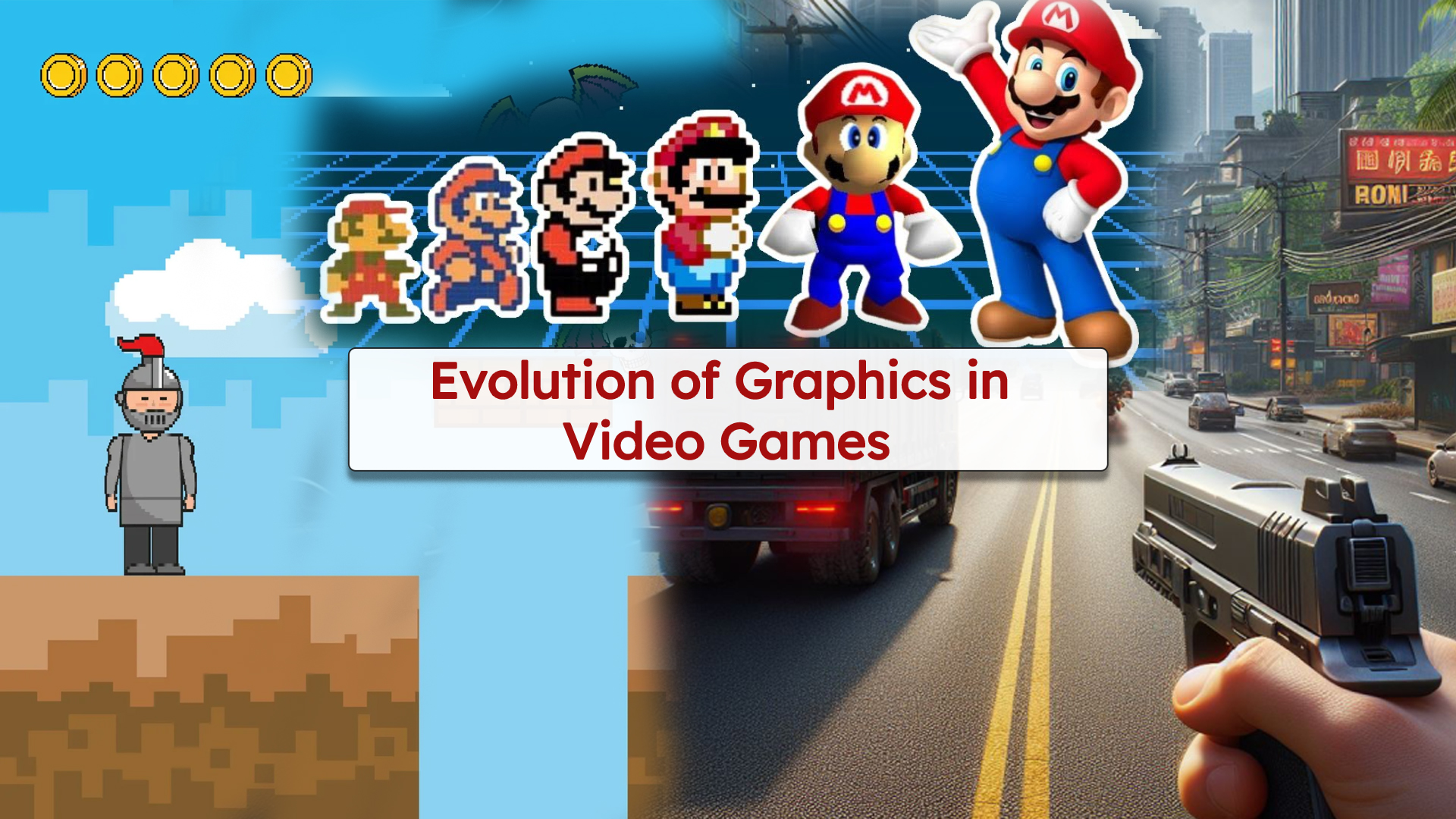

Video games have come a long way since the days of pixelated plumbers and chunky sprites. What were once simple blocks of color have evolved into visuals that can rival Hollywood CGI. The journey from 8-bit to photorealism is not just a story of better graphics cards; it’s a tale of artistic innovation, technical breakthroughs, and an industry constantly pushing the boundaries of what’s possible.

The 8-Bit Era: Where It All Began

The 1980s marked the golden age of 8-bit gaming. Titles like Pac-Man (1980), Donkey Kong (1981), and Super Mario Bros. (1985) used limited palettes and low-resolution sprites to deliver engaging gameplay. Despite the simplicity, these games had charm and character. Developers learned to use constraints creatively, turning blocks into characters and beeps into music.

The 8-bit era wasn’t just about graphics; it was about establishing the visual language of video games. Health bars, score counters, and side-scrolling environments became standard, laying the foundation for future design.

The 16- and 32-Bit Leap: More Color, More Detail

The late ’80s and early ’90s brought the 16-bit revolution, thanks to consoles like the Sega Genesis and Super Nintendo, with 32-bit hardware following in the mid-’90s. Graphics became more detailed, animations smoother, and worlds richer. This era introduced us to iconic visual styles, such as those of The Legend of Zelda: A Link to the Past and Street Fighter II, that are still loved today.

This period also saw the rise of parallax scrolling, the Super Nintendo’s Mode 7 effects, and early experiments with 3D, hinting at what was to come.

The Polygonal Revolution: 3D Takes Over

The mid-to-late ’90s were a game-changer. With the advent of powerful consoles like the Sony PlayStation and Nintendo 64, 3D graphics became the new standard. Characters and environments were now rendered in polygons, allowing for full movement in three-dimensional space.

Games like Tomb Raider, Super Mario 64, and Final Fantasy VII redefined what players expected from visual immersion. Though rough around the edges by today’s standards, these early 3D games were revolutionary in creating depth, scale, and cinematic storytelling.

Early 2000s: Realism Begins to Bloom

With the PlayStation 2, Xbox, and GameCube, developers began chasing realism in earnest. Lighting, shadows, and texture mapping saw major advancements. Characters looked more human, environments more believable.

This era also brought more complex animation systems, ragdoll physics, and expressive facial animation. Games like Metal Gear Solid 2 and Halo: Combat Evolved on consoles, and Half-Life 2 on PC, pushed graphical boundaries and set new standards.

HD Era to Today: Photorealism and Beyond

The jump to high definition with the Xbox 360 and PlayStation 3 marked a major milestone. Character models gained fine detail down to skin pores, environments gained dynamic weather, and cutscenes blurred the line between game and movie.

Today, with current-gen hardware like the PlayStation 5, Xbox Series X, and powerful PCs, we’ve entered an age of near-photorealism. Real-time ray tracing, motion capture, 4K textures, and advanced physics engines contribute to visuals so lifelike that a still frame can be mistaken for a photograph. Titles like Red Dead Redemption 2, Cyberpunk 2077, and The Last of Us Part II showcase just how far we’ve come.

What’s Next?

As technology continues to evolve, so do the possibilities. The future may bring real-time AI-generated graphics, even more immersive VR experiences, and hyper-realistic virtual worlds indistinguishable from real life. But perhaps the most exciting part? Despite all the advancements, the core of great game visuals still lies in artistic vision and creative storytelling.

From 8-bit blocks to digital masterpieces, video game graphics have transformed dramatically. But no matter how realistic things get, it’s the magic behind the pixels that keeps us playing.