Las Vegas, CES 2026: Nvidia may have just delivered the biggest technology inflection point since generative AI entered the mainstream. During the company’s keynote, CEO Jensen Huang unveiled Alpamayo, a new family of AI models designed for robots and autonomous machines, calling it the long-awaited “ChatGPT moment for physical AI.”

The announcement positions Nvidia at the center of the next AI revolution: systems that don’t just generate text or images, but reason, decide, and act in the real world.

What Is Nvidia Alpamayo?

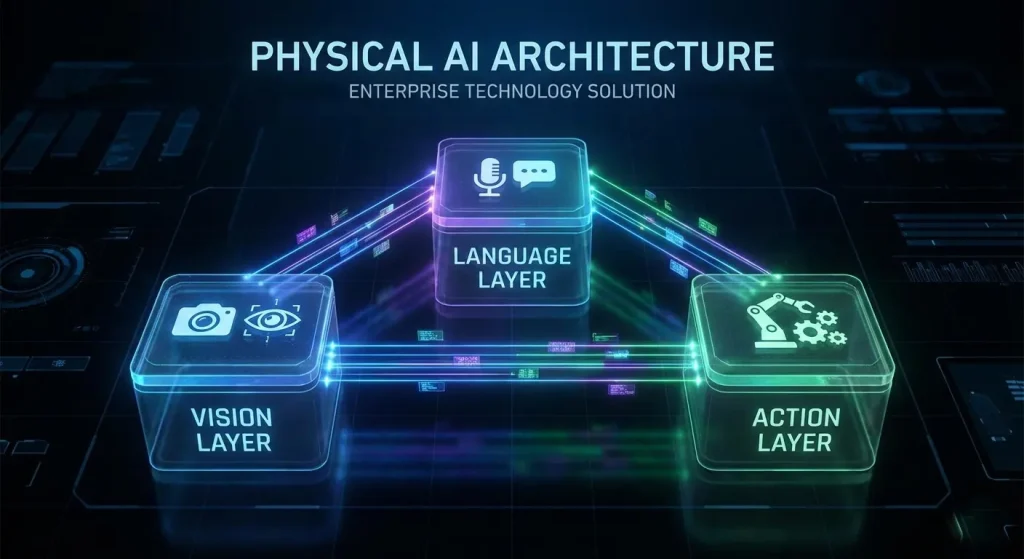

Alpamayo is an open, modular AI platform that blends vision, language, and action into a single reasoning system. Unlike traditional robotics software—which separates perception from decision-making—Alpamayo enables machines to think through problems step by step.

According to Nvidia’s official announcement, the Alpamayo ecosystem includes:

- Alpamayo-1 – A vision-language-action (VLA) model capable of chain-of-thought reasoning

- AlpaSim – A high-fidelity simulation environment for training autonomous agents

- Open Physical AI Datasets – Real-world driving and robotics data for edge-case learning

Nvidia detailed the release in an announcement on its investor relations site, emphasizing safety, transparency, and explainability as core design goals.

Why This Is Being Called the “ChatGPT Moment” for Robotics

When ChatGPT launched, it fundamentally changed how people interact with AI. Nvidia believes Alpamayo will do the same for robots, autonomous vehicles, and other physical machines.

During his CES keynote, Jensen Huang explained that physical AI requires three things:

- Understanding the environment

- Reasoning about consequences

- Executing actions safely

Alpamayo is designed to handle all three in a unified model, allowing machines to explain why they made a decision—an essential requirement for trust and large-scale deployment.
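To make the three-step idea concrete, here is a minimal toy sketch of an understand, reason, act loop that returns its reasoning alongside the chosen action. It is not Alpamayo's API or architecture: the names (`Observation`, `Decision`, `understand`, `reason`, `execute`, `step`) and the 2-second safety margin are hypothetical, and a simple rule stands in for what would be a learned model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Observation:
    """Hypothetical sensor summary for a single time step."""
    obstacle_distance_m: float
    speed_mps: float


@dataclass
class Decision:
    """An action plus the human-readable steps that led to it."""
    action: str
    reasoning: List[str] = field(default_factory=list)


def understand(obs: Observation) -> Dict[str, float]:
    """Step 1: turn raw observations into facts about the environment."""
    if obs.speed_mps > 0:
        time_to_obstacle = obs.obstacle_distance_m / obs.speed_mps
    else:
        time_to_obstacle = float("inf")  # a stationary machine never arrives
    return {"time_to_obstacle_s": time_to_obstacle}


def reason(facts: Dict[str, float], min_gap_s: float = 2.0) -> Decision:
    """Step 2: reason about consequences and record each step taken."""
    ttc = facts["time_to_obstacle_s"]
    steps = [f"Time to obstacle is {ttc:.1f} s."]
    if ttc < min_gap_s:
        steps.append(f"That is below the {min_gap_s:.1f} s margin, so slow down.")
        return Decision("brake", steps)
    steps.append(f"That is at or above the {min_gap_s:.1f} s margin, so continue.")
    return Decision("maintain_speed", steps)


def execute(decision: Decision,
            allowed: Tuple[str, ...] = ("brake", "maintain_speed")) -> str:
    """Step 3: act safely by falling back to braking on any unknown action."""
    return decision.action if decision.action in allowed else "brake"


def step(obs: Observation) -> Tuple[str, List[str]]:
    """Run one understand, reason, act cycle and return action plus explanation."""
    decision = reason(understand(obs))
    return execute(decision), decision.reasoning


if __name__ == "__main__":
    action, why = step(Observation(obstacle_distance_m=10.0, speed_mps=10.0))
    print(action)
    for line in why:
        print(" -", line)
```

The point of the sketch is the shape of the output: every action arrives with the steps that produced it, which is the kind of explainability the keynote described as a requirement for trust.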

This shift aligns with Nvidia’s broader push into physical AI and robotics platforms, a category the company sees as the next trillion-dollar market.

Real-World Adoption: Automakers and AV Developers

Nvidia didn’t just announce research—it showcased real production use cases.

One of the headline partnerships revealed at CES 2026 involves Mercedes-Benz. The automaker confirmed that upcoming CLA models in the U.S. will integrate Alpamayo-powered autonomy features, building on Nvidia’s DRIVE platform.

Other companies exploring the Alpamayo ecosystem include:

- Lucid Motors

- Jaguar Land Rover

- Uber Autonomous Technologies

- Berkeley DeepDrive

Industry analysts speaking to Barron’s noted that Alpamayo could accelerate progress toward Level 4 autonomous driving, particularly in geofenced urban environments.

Beyond Cars: The Robotics Opportunity

While autonomous vehicles dominated headlines, Alpamayo’s implications extend far beyond transportation.

Nvidia demonstrated how the same reasoning models can be applied to:

- Warehouse robots navigating dynamic environments

- Humanoid assistants performing multi-step tasks

- Industrial automation with real-time decision-making

According to Nvidia’s engineering blog, simulation-first training—powered by Omniverse-based environments—will be critical to scaling robotics safely.

Market Reaction and Industry Skepticism

Despite the excitement, not everyone is convinced the robotics breakthrough is immediate.

Some investors argue that Nvidia’s valuation is still driven primarily by data-center GPUs and generative AI workloads. Robotics, they say, may take longer to monetize at scale.

Competitors like Tesla have also downplayed third-party autonomy stacks, suggesting that real-world edge cases remain a massive technical challenge.

Still, analysts interviewed by the Associated Press agree that Nvidia has positioned itself as the foundational infrastructure provider for physical AI, much as it did for generative AI.

What Alpamayo Means for the Future of AI

The unveiling of Alpamayo marks a clear shift in the AI narrative:

- From digital to physical: AI moves off screens and into the real world

- From prediction to reasoning: machines explain their decisions instead of only producing outputs

- From closed to open: Nvidia embraces open models and datasets

If ChatGPT made AI conversational, Alpamayo could make it operational.

As CES 2026 comes to a close, one thing is certain: the next chapter of artificial intelligence will be lived, driven, and walked—quite literally—by machines that can think.
