A new contender has entered the AI race, and it is built on a very different promise. Moxie Marlinspike, the founder of Signal, the messaging app long celebrated for its privacy-first philosophy, has launched Confer, an end-to-end encrypted AI assistant.

In a world increasingly shaped by deepfakes, data breaches, and AI surveillance fears, Confer’s arrival raises a bold question: could privacy-focused AI disrupt the dominance of platforms like ChatGPT?

What Is Signal’s Confer?

Confer is positioned as a privacy-first AI assistant designed with the same principles that made Signal a trusted communication tool for journalists, activists, and security-conscious users.

Unlike AI platforms that process and store conversations in readable form on their own servers, Confer emphasizes end-to-end encryption, so that user prompts, conversations, and outputs are meant to remain inaccessible to third parties, including the platform operator itself.
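To make the idea concrete, here is a minimal sketch of what "inaccessible to the platform" means in practice: the key is created and kept on the user's device, and anything that leaves the device is ciphertext. This is not Confer's actual protocol, which the article does not describe. The function names `encrypt` and `decrypt` are hypothetical, and the cipher is a toy built from Python's standard library purely for illustration; a real product would use a vetted authenticated cipher.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy keystream: SHAKE-256 used as an extendable-output function.
    # Illustration only; this is an assumption, not a vetted cipher.
    return hashlib.shake_256(b"stream" + key + nonce).digest(length)


def encrypt(key: bytes, plaintext: str) -> bytes:
    """Encrypt on the client. Returns nonce + ciphertext + 32-byte tag."""
    data = plaintext.encode("utf-8")
    nonce = secrets.token_bytes(16)  # fresh random nonce per message
    stream = _keystream(key, nonce, len(data))
    ciphertext = bytes(a ^ b for a, b in zip(data, stream))
    # The tag lets the recipient detect tampering or a wrong key.
    tag = hmac.new(key, b"tag" + nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(key: bytes, blob: bytes) -> str:
    """Verify the tag first, then decrypt. Raises ValueError on failure."""
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, b"tag" + nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message was tampered with or the key is wrong")
    stream = _keystream(key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream)).decode("utf-8")


if __name__ == "__main__":
    device_key = secrets.token_bytes(32)  # never leaves the user's device
    blob = encrypt(device_key, "Summarize my medical notes")
    print(len(blob), "opaque bytes are all a relay server would see")
    print(decrypt(device_key, blob))
```

In this sketch, a server that stores or forwards `blob` learns only its length. Without `device_key` it can neither read the prompt nor alter it undetected.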

Why Privacy Is the Biggest Selling Point of 2026

As artificial intelligence becomes deeply embedded in daily life, public trust is eroding. High-profile data leaks and misuse scandals—frequently covered by outlets like Wired and The Guardian—have made users increasingly wary of how AI systems handle personal information.

In response, privacy is no longer a niche feature; it is a competitive advantage. Confer is launching at a moment when users are actively searching for alternatives that do not ask them to trade privacy for convenience.

How Confer Differs From ChatGPT

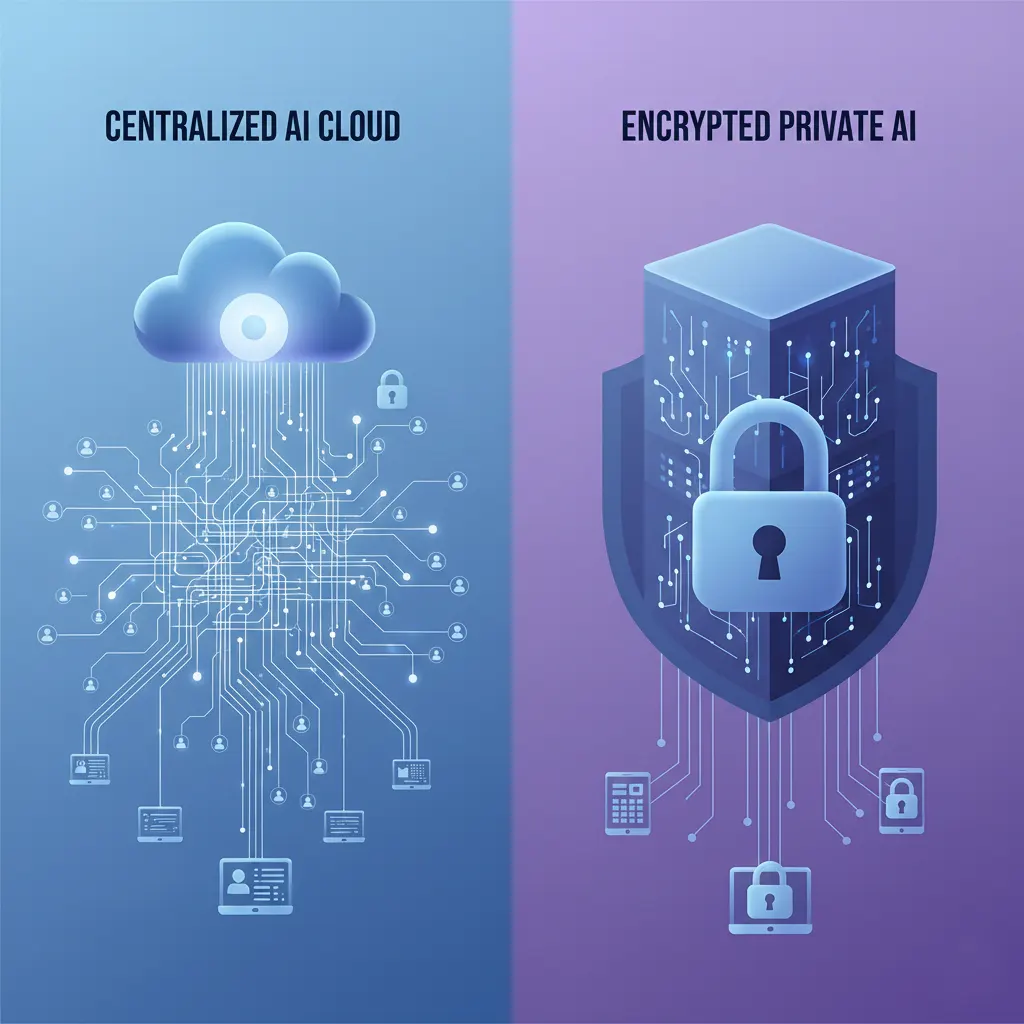

While ChatGPT has become synonymous with generative AI, its scale comes with trade-offs. Large hosted AI services typically run on centralized infrastructure and may log conversations to improve their models.

Confer takes a different approach:

- End-to-end encrypted AI conversations
- No long-term storage of user prompts
- Minimal metadata collection
- Open privacy architecture aligned with Signal’s ethos

This design may appeal to professionals in journalism, law, healthcare, and enterprise environments where confidentiality is critical.
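As a rough illustration of the "no long-term storage" point, the sketch below keeps conversation turns in memory only and drops them once they pass a time limit. It is an assumption about how such a policy could look, not a description of Confer's implementation. The class name `EphemeralSession` and the `ttl_seconds` parameter are hypothetical.

```python
import time


class EphemeralSession:
    """Holds conversation turns in memory and forgets them after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the expiry logic can be tested
        self._turns = []     # list of (timestamp, text); never written to disk

    def add(self, text: str) -> None:
        self._purge()
        self._turns.append((self._clock(), text))

    def history(self) -> list:
        self._purge()
        return [text for _, text in self._turns]

    def _purge(self) -> None:
        # Drop every turn that is older than the retention window.
        cutoff = self._clock() - self._ttl
        self._turns = [(t, s) for t, s in self._turns if t > cutoff]


if __name__ == "__main__":
    session = EphemeralSession(ttl_seconds=0.1)
    session.add("What are my rights as a tenant?")
    print(session.history())  # the turn is still available
    time.sleep(0.2)
    print(session.history())  # the turn has expired and is gone
```

The design choice here is that expiry is enforced on every read and write, so stale prompts are not kept around waiting for a separate cleanup job.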

The Deepfake and Data Leak Era

The rise of AI-generated misinformation has intensified concerns around trust. Deepfakes, voice cloning, and synthetic media have made it increasingly difficult to verify authenticity online.

Organizations like the Electronic Frontier Foundation have repeatedly warned that AI systems lacking strong privacy controls could amplify these risks.

By minimizing data retention, Confer positions itself as a tool that reduces the surface area for abuse: data that is never stored cannot later be leaked, sold, or repurposed.

Will Privacy-First AI Go Mainstream?

The biggest challenge facing Confer is scale. Privacy-centric platforms often struggle to match the speed, features, and ecosystem integration of tech giants.

However, as regulatory pressure increases and users become more privacy-literate, analysts believe demand for secure AI tools will grow—especially in regions enforcing strict data protection laws.

Publications such as Bloomberg Technology note that the AI market is beginning to fragment, with specialized tools outperforming general-purpose platforms in specific use cases.

Is This the End of ChatGPT’s Dominance?

It’s unlikely that Confer will replace ChatGPT overnight. But dominance doesn’t have to disappear to be challenged. Just as Signal never replaced WhatsApp—but redefined secure messaging—Confer may carve out a powerful niche in privacy-sensitive AI use.

In 2026, the AI race is no longer just about intelligence. It’s about trust.

Signal’s Confer represents a meaningful shift in the AI conversation. As concerns around surveillance, data ownership, and digital manipulation grow, privacy-first AI may become less of an alternative—and more of a necessity.

Whether or not Confer dethrones ChatGPT, it signals a future where users demand more control over how AI interacts with their data.
